Have you ever imagined an AI system stuck in a time loop, still drawing on last year's information and completely unaware of today's breaking news?

Say hi to RAG, an AI technique that combines real-time information retrieval with advanced language generation. Pairing RAG with your Large Language Model (LLM) is like giving it access to a wealth of fresh, external knowledge, which broadens what it can do.

What Is This RAG Thing?

Retrieval-Augmented Generation (RAG) enhances LLMs by giving them access to a rich, dynamic store of external information. Instead of relying solely on a static training set (which can quickly go out of date), RAG adds an on-demand retrieval step that fetches relevant data at the moment a question is asked.

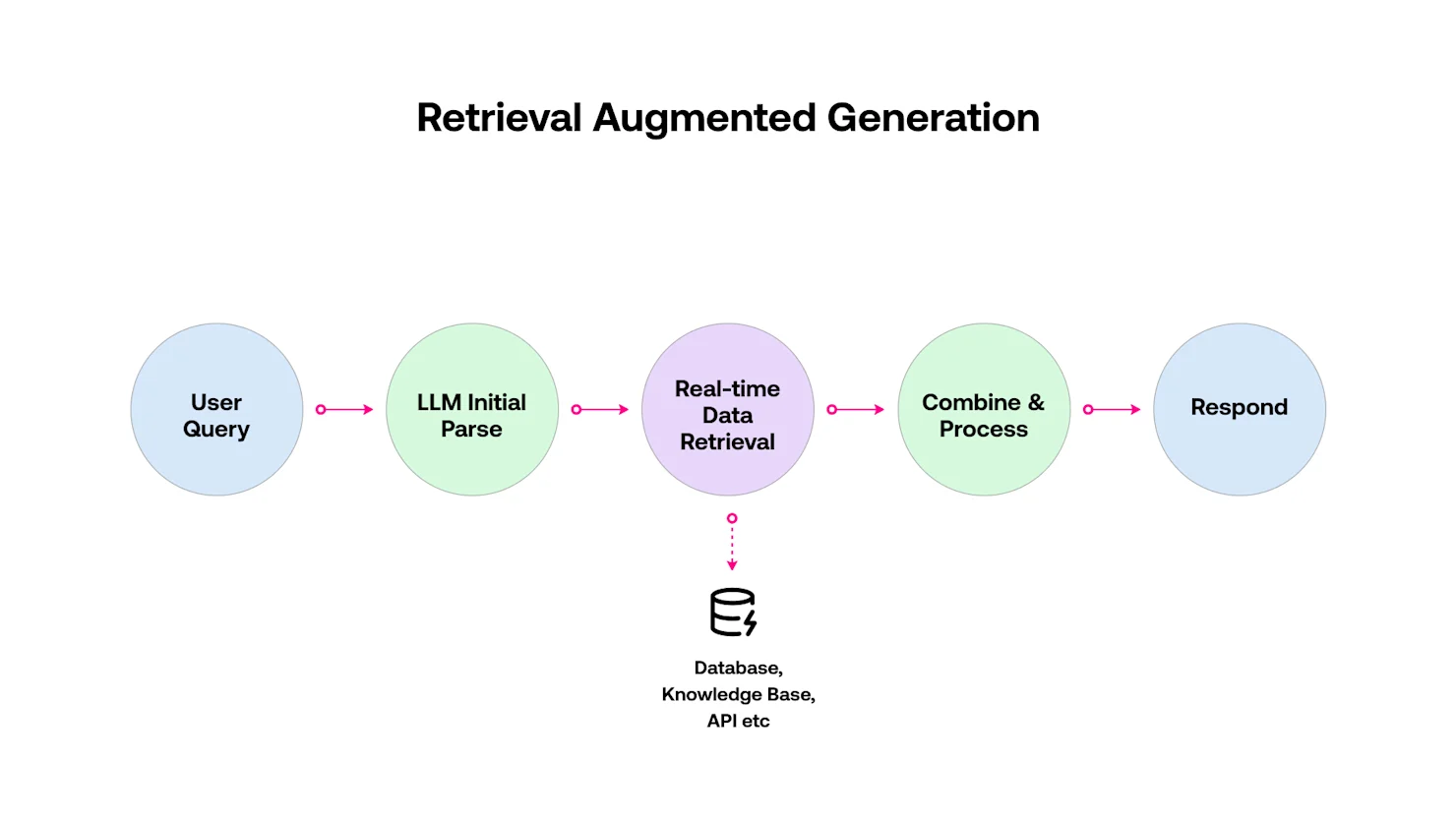

The simplified process looks something like this:

Interpret the request

The AI analyses the question you ask (for example, "What is the most recent information on our product pricing?") and determines what data it needs.

Get relevant data

The system scans external sources such as company databases, knowledge bases, or APIs to fetch the most up-to-date, relevant information.

Combine and respond

The LLM synthesises the fresh data into an up-to-date answer enriched with context. It doesn't just repeat what it found; it integrates the retrieved data into its answer.
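To make the "Get relevant data" step a little more concrete, here is a minimal Python sketch of how a retriever might rank documents against a question. It is an illustration under simplifying assumptions, not how any particular product works: production RAG systems typically use embedding models and a vector database, whereas this sketch swaps in plain word-count (bag-of-words) cosine similarity so it runs with the standard library alone. The `knowledge_base` entries and the `retrieve`, `tokenize`, and `top_k` names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors (0.0 to 1.0)."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: dict[str, str], top_k: int = 2) -> list[tuple[str, float]]:
    """Return (doc_id, score) for the top_k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    scored = [
        (doc_id, cosine_similarity(query_vec, Counter(tokenize(text))))
        for doc_id, text in documents.items()
    ]
    # Drop documents that share no words with the query, then sort best-first.
    scored = [item for item in scored if item[1] > 0]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Hypothetical knowledge-base entries, for illustration only.
knowledge_base = {
    "pricing-page": "Current product pricing for the Pro plan was updated this month.",
    "refund-policy": "Refunds are available within 14 days of purchase.",
    "release-notes": "The new export feature launched this week.",
}

results = retrieve("What is the current product pricing?", knowledge_base)
for doc_id, score in results:
    print(f"{doc_id}: {score:.2f}")
```

With these sample entries, the pricing document ranks first, the release notes (which share only the word "the" with the question) come a distant second, and the refund policy, which shares no words at all, is dropped. Swapping `cosine_similarity` for an embedding-based score would keep the same overall shape.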

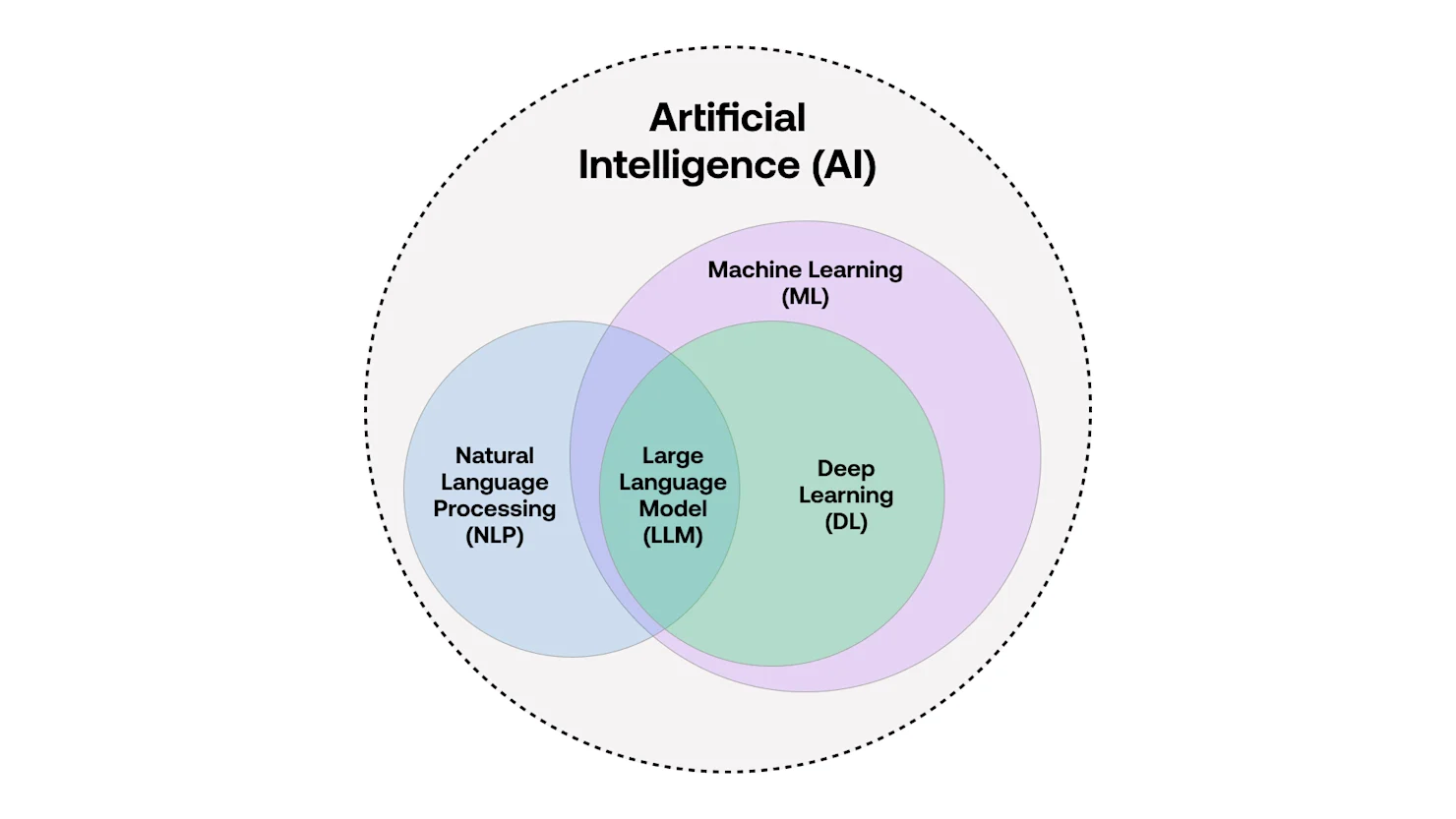

Where It Fits in the AI Family Tree

Machine Learning is like the muscle, LLMs are the geniuses, and RAG is the super librarian who gets the right info at the right time. It raises AI's status from a "decent predictor" to a "constant source of knowledge", connecting static training data from the past to the dynamic, ever-changing real world.

What's so different about it?

No More Outdated Replies - With RAG, your AI can stay up to date on the latest product specs, policy updates, and breaking news, as long as the sources it retrieves from are current.

Factual Responses - RAG reduces fabricated or incorrect statements, known as "hallucinations", by grounding answers in actual documents or databases.

Efficiency at Scale - Enterprises can connect vast knowledge repositories so their AI assistant can retrieve the newest data efficiently. A game-changer for customer service, sales, and everything in between.

Context-Aware Answers - RAG tailors responses to each query’s unique context, so your AI doesn’t just sound knowledgeable but is genuinely well informed.

How It Works

This diagram outlines the journey of a user query as it moves through a language model, starts a search for the latest information, and ultimately merges all components in its response.

User Query:

The end user or application sends a request, e.g. “What is the current product pricing?”

LLM Initial Parse:

The LLM reads the question and decides whether it needs more details.

Real-time Data Retrieval:

The system fetches relevant data from external sources, such as a knowledge base, database, or API, reflecting the most recent updates.

Combine & Process:

The LLM merges the fetched information with its own understanding, so the response is both contextually rich and up to date.

Respond:

The user receives an answer grounded in real-time or current data, rather than relying solely on the LLM’s static training set.
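The five stages above can be sketched end to end in a few dozen lines of Python. Everything here is hypothetical and simplified: `needs_retrieval` stands in for the LLM's initial parse with a keyword heuristic, `fetch` ranks an in-memory list by shared words rather than querying a real knowledge base or API, and `llm` is any function that takes a prompt and returns text, so you could plug in a real model client or, as in the usage example, a stub (`echo_llm`) that simply returns the prompt. The document names and dates are made up for illustration.

```python
import re
from dataclasses import dataclass
from datetime import date
from typing import Callable


@dataclass
class Document:
    doc_id: str
    text: str
    updated: date  # when the source was last refreshed


# Hypothetical cue words suggesting a question depends on fresh data.
TIME_SENSITIVE_WORDS = {"current", "latest", "today", "new", "recent", "now"}


def words(text: str) -> set[str]:
    """Lower-cased set of alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def needs_retrieval(query: str) -> bool:
    """Stage 2 (initial parse), approximated by a keyword check instead of an LLM."""
    return bool(words(query) & TIME_SENSITIVE_WORDS)


def fetch(query: str, store: list[Document], top_k: int = 2) -> list[Document]:
    """Stage 3: rank documents by shared words, preferring newer ones on ties."""
    query_words = words(query)

    def score(doc: Document) -> tuple[int, date]:
        return (len(query_words & words(doc.text)), doc.updated)

    ranked = sorted(store, key=score, reverse=True)
    return [doc for doc in ranked if score(doc)[0] > 0][:top_k]


def build_prompt(query: str, docs: list[Document]) -> str:
    """Stage 4: put the retrieved context, with dates, ahead of the question."""
    context = "\n".join(
        f"[{doc.doc_id}, updated {doc.updated.isoformat()}] {doc.text}" for doc in docs
    )
    return (
        "Answer using only the context below. If it is not enough, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


def answer(query: str, store: list[Document], llm: Callable[[str], str]) -> str:
    """Stages 1-5: take the query, decide, retrieve if needed, and generate."""
    if not needs_retrieval(query):
        return llm(query)  # the model's static knowledge is treated as enough
    return llm(build_prompt(query, fetch(query, store)))


def echo_llm(prompt: str) -> str:
    """Stand-in for a real model call: returns the prompt it was given."""
    return prompt


store = [
    Document("pricing-page", "Current pricing for the Pro plan", date(2024, 5, 1)),
    Document("old-pricing", "Archived pricing for the Pro plan", date(2022, 1, 1)),
    Document("refund-policy", "Refunds are available within 14 days", date(2024, 3, 1)),
]

result = answer("What is the current product pricing?", store, echo_llm)
print(result)
```

Placing the retrieved text, with its last-updated date, ahead of the question and instructing the model to stay within it is what lets the final answer reflect current data rather than only the training set. Note that the older pricing entry is still retrieved here; deciding which of two conflicting sources to trust is a separate filtering step.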

Common Use Cases

Customer Support Assistants - Ask about a newly launched feature or the latest refund policy, and RAG fetches the most current documentation to generate an instant, accurate reply.

Sales & Marketing - Need the newest competitor data or updated pricing? RAG queries your databases and market info in real time, ensuring your pitches and campaigns don’t rely on stale facts.

Research & Reporting - Whether you’re sifting through academic papers or corporate data, RAG hunts for the latest references, forming an evidence-based summary that’s actually current.

Compliance & Legal - Regulations aren’t static. RAG helps ensure you’re referencing the most recent amendments, guidelines, or case law, reducing compliance missteps.

Real Companies Using RAG

Microsoft (Bing Chat) - Microsoft integrated RAG into Bing’s AI chat feature so it can reference real-time web content. If you ask about today’s headlines, Bing Chat fetches the newest articles before responding.

Salesforce (Einstein GPT) - Salesforce taps RAG to pull real-time customer data from your CRM, letting Einstein GPT craft highly personalised insights on the fly.

BloombergGPT - In finance, real-time accuracy is everything. Bloomberg’s finance-specific LLM draws on the company’s extensive financial data to help traders keep up with changing market information.

Thomson Reuters - With vast legal and regulatory archives to keep track of, Thomson Reuters uses retrieval-based methods so legal pros can access the freshest case law and amendments each time they query.

ServiceNow - Well known for its workflow automation, ServiceNow uses virtual agents for data retrieval, so you get the newest internal info, such as support tickets or technical docs, without having to update anything by hand.

Limitations of RAG

Despite its strength, RAG does present challenges:

Data Quality and Reliability - If your external sources are outdated, biased, or contain errors, the AI’s response can still go off course. As the saying goes, "garbage in, garbage out."

Complex Infrastructure - You’ll need robust systems for data storage, indexing, and fast querying, plus the technical overhead to keep it all running smoothly.

Security and Privacy - Real-time data retrieval means potential exposure of sensitive info. Proper access controls and encryption on any connected systems are a must.

Maintenance Overhead - Retrieval pipelines need ongoing monitoring. If an API breaks or a source changes, your AI may not find the info it needs.

Potential for Information Overload - Pulling from multiple databases can lead to conflicting or excessive data. Proper filtering and ranking are crucial to providing concise, accurate answers.

Costs Can Add Up - Maintaining databases, APIs, and infrastructure for real-time retrieval may require significant investments in both technology and talent. Plus, frequent or complex queries can drive up usage fees in cloud-based environments like AWS or Azure.
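Several of these limitations are typically addressed with a filtering pass between retrieval and generation. The Python sketch below is one hypothetical way to do it, assuming each retrieved item carries a relevance score, a last-updated date, and the set of roles allowed to read it. The `Retrieved` type, the `filter_results` function, and thresholds such as `max_age_days` and `min_score` are illustrative choices, not settings from any specific product. It enforces access control first, then drops stale, weakly relevant, and duplicate results, and caps how many reach the prompt.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class Retrieved:
    doc_id: str
    text: str
    score: float    # relevance score assigned by the retriever
    updated: date   # when the source was last refreshed
    allowed_roles: frozenset = field(default_factory=frozenset)


def filter_results(
    results: list[Retrieved],
    user_roles: set[str],
    today: date,
    max_age_days: int = 365,
    min_score: float = 0.2,
    max_docs: int = 3,
) -> list[Retrieved]:
    """Hypothetical post-retrieval filter, run before anything reaches the prompt."""
    kept: list[Retrieved] = []
    seen_texts: set[str] = set()
    # Consider the most relevant (then newest) results first.
    for item in sorted(results, key=lambda r: (r.score, r.updated), reverse=True):
        if not (item.allowed_roles & user_roles):
            continue  # security: this user may not read this source
        if today - item.updated > timedelta(days=max_age_days):
            continue  # data quality: too old to trust for "current" answers
        if item.score < min_score:
            continue  # overload: weakly related results mostly add noise
        normalized = " ".join(item.text.lower().split())
        if normalized in seen_texts:
            continue  # overload: same text already kept from another source
        seen_texts.add(normalized)
        kept.append(item)
        if len(kept) == max_docs:
            break
    return kept


# Hypothetical retrieval results, for illustration only.
today = date(2024, 6, 1)
results = [
    Retrieved("pricing-v2", "Pro plan pricing updated", 0.9, date(2024, 5, 20), frozenset({"sales", "support"})),
    Retrieved("pricing-copy", "Pro plan  pricing updated", 0.85, date(2024, 5, 20), frozenset({"sales"})),
    Retrieved("pricing-v1", "Pro plan pricing (old)", 0.8, date(2021, 1, 10), frozenset({"sales"})),
    Retrieved("hr-salaries", "Salary bands", 0.7, date(2024, 4, 1), frozenset({"hr"})),
    Retrieved("blog-post", "Unrelated blog post", 0.1, date(2024, 5, 1), frozenset({"sales"})),
]

kept = filter_results(results, user_roles={"sales"}, today=today)
print([r.doc_id for r in kept])
```

For a user with only the `sales` role, just `pricing-v2` survives: the copy is a duplicate once whitespace is normalised, `pricing-v1` is over a year old, `hr-salaries` is restricted to HR, and the blog post falls below the score threshold. Checking permissions before anything else means restricted text never enters the prompt at all, which matters more than any of the quality filters.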

What are you waiting for?

RAG can transform how AI helps your organisation streamline and optimise its workflows, making them more efficient and accurate. Instead of being limited to static knowledge gained during training, your AI can now navigate through real-time data streams to deliver relevant, timely, and accurate responses.

Are you ready to harness the potential of RAG in your workflows? It may be exactly the tool you've been searching for: a powerful instrument, like Thor’s hammer, that can obliterate misinformation, future-proof your AI plans, and pave the way for a fresh era of dynamic, contextually rich discussions.